Instagram is introducing a new feature that many parents have been waiting for. Starting next year, parents will be able to control whether their teenage children can talk to AI chatbots on the app.

This new feature comes after rising concerns about the safety of young users interacting with artificial intelligence on social media.

Why This Change Is Happening

Meta, the company that owns Instagram, has faced growing pressure from parents and regulators to make the app safer for teens. In recent months, reports showed that some AI chatbots on Instagram had engaged young users in conversations on topics that were not suitable for children or teenagers. This led to public outcry and even legal action.

In response, Meta is giving parents more control over how their children use the app. The company said it wants to make sure AI tools are helpful, educational, and safe for everyone, especially younger users.

What Parents Will Be Able to Do

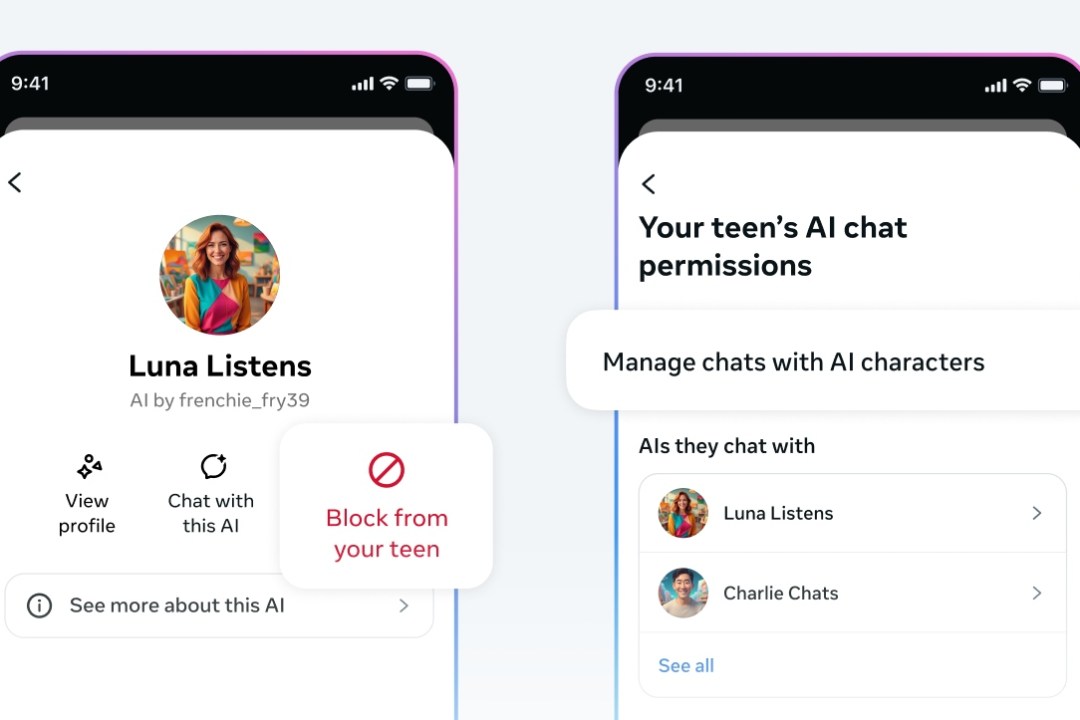

The new parental controls will let parents block their children from chatting with AI characters on Instagram. Parents will have two main options: they can turn off their teen's chats with AI characters entirely, or block only the specific AI characters they are not comfortable with.

Parents will also be able to see general information about what their children are discussing with these AI chatbots. For example, they might be able to view the main topics being talked about, such as school, hobbies, or music. However, the full details of each conversation will remain private to protect teen privacy.

Even if parents turn off chats with AI characters, their children will still be able to use Meta's main AI assistant, which is designed to have age-appropriate settings and filters.

Why Parents Are Worried About AI Chatbots

AI chatbots are becoming more common across social media platforms. They can chat like real people, answer questions, and even act as digital companions. While this technology can be helpful, it also comes with risks.

Reports in 2025 showed that some AI chatbots were giving teens misleading or harmful advice. Others were engaging in conversations that were romantic or overly personal. These findings alarmed parents, experts, and regulators, who said the technology was being rolled out too fast without proper safety checks.

The situation became even more serious after a tragic incident in California, where a teenager died by suicide after allegedly receiving harmful responses from an AI chatbot. This event brought global attention to the urgent need for stronger protections for minors online.

Meta’s Promise to Improve Teen Safety

Meta says the new parental controls are part of its bigger plan to make social media safer for young users. The company has also introduced new teen account settings that make Instagram work more like a PG-13 environment. This means teens will see fewer posts with strong language, sexual content, or violence.

Parents can also adjust these safety levels and make them stricter if they want. Instagram is also blocking certain search terms, such as those related to self-harm or eating disorders, and limiting who teens can message directly.

Adam Mosseri, the head of Instagram, said the company hopes these changes will help parents feel more confident about their teens using the app. He added that Meta will continue to work with experts and parents to improve these tools.

Critics Say It Is Not Enough

Even with these updates, some experts believe Meta’s changes do not go far enough. James Steyer, the founder of Common Sense Media, said that no teenager should be using AI chatbots until the technology is proven to be completely safe. He called Meta’s update a “reactive move” rather than a long-term solution.

Critics argue that Meta is introducing these changes only after public pressure and not because of genuine concern for child safety. They want the company to slow down on AI features and focus more on human moderation and transparency.

The Future of AI and Teen Safety

As AI continues to grow, more social media companies are expected to add similar parental control options. The goal is to give parents peace of mind while still allowing teens to explore technology in a safe way.

Meta says that the new AI controls will first launch on Instagram in the United States, the United Kingdom, Canada, and Australia. The company plans to expand to more regions later.

The update will roll out early next year, giving parents time to learn how it works and prepare to set the right limits for their teens.

The Bottom Line

This change marks an important step in how social media handles AI and youth safety. Parents will soon have the power to decide if their children can talk to AI chatbots or not. While many welcome the move, it also raises bigger questions about how far artificial intelligence should go in our daily lives.

With more teens spending time online, companies like Meta are being forced to find a balance between innovation and protection. Whether these new controls will be enough remains to be seen, but for now, parents are getting a stronger voice in their children’s digital world.
