Welcome to Bintu's Art and Everything

World-Class Digital Marketing & HR Services for Growing Businesses

Bintu’s Art and Everything is one of the leading digital marketing agencies in Nigeria and a trusted name among the country's top HR consulting firms. We help businesses grow with digital marketing strategies that increase revenue, boost visibility, and attract the right customers.

We also provide specialized HR services focused on recruiting and managing the best digital and creative talent: designers, content creators, social media managers, and marketers who can take your brand to the next level.

Who We Are

Bintu’s Art and Everything is a leading digital marketing agency in Nigeria, specializing in creative branding, digital marketing, and content creation. We are also one of the top HR consulting firms in Nigeria, offering professional human resource solutions for businesses that want to grow both online and offline.

Our team is passionate about delivering tailored marketing strategies that drive measurable growth while also helping companies build strong digital teams. We help you hire the best digital and creative professionals, manage payroll, and train staff, and we provide all the support you need.

Transform Your Business with Expert HR Solutions

Bintu’s Art and Everything knows that businesses need talented digital and creative people. That is why our HR services focus on recruiting, training, and supporting digital creatives, the talent that powers modern marketing. We help startups, SMEs, and established companies build strong teams of graphic designers, content writers, video editors, social media managers, website managers, SEO/SEM specialists, and digital strategists.

Beyond hiring, we also offer employee training, payroll, compliance, and performance management services. This frees you to focus on other aspects of your business and generate more revenue.

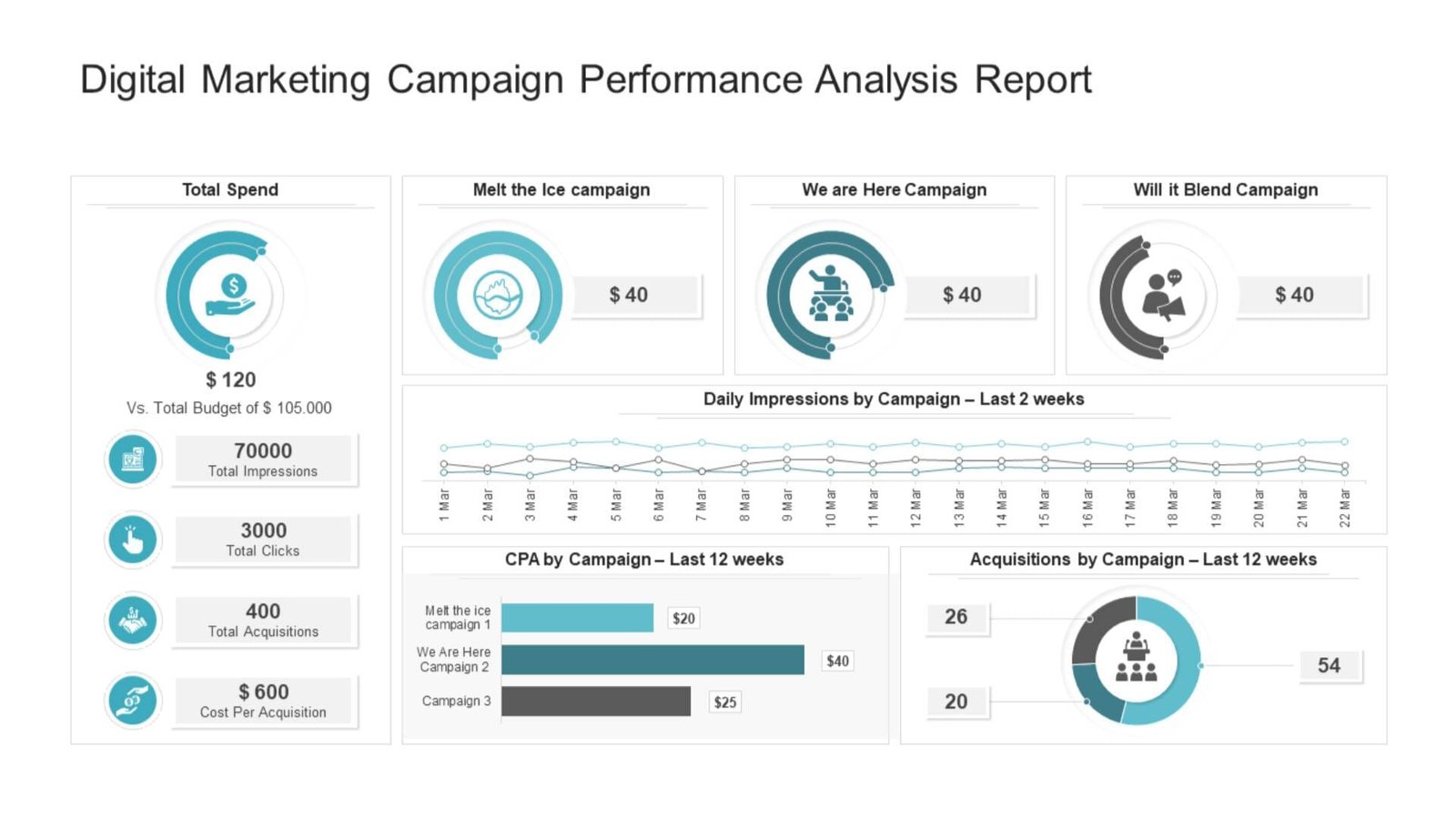

Grow Your Business with World-Class Digital Marketing Services in Nigeria

Bintu’s Art and Everything helps businesses attract more customers, increase sales, and build stronger brands online. Our team creates digital marketing strategies that are simple, effective, and built around your goals.

We work with startups, small businesses, and established brands to deliver results through social media marketing, SEO, content creation, Google Ads, social media ads, and email marketing. Every campaign is designed to connect you with potential customers and turn them into lifelong buyers.

OUR APPROACH

With Bintu’s Art and Everything, you don’t have to worry about who’s handling your digital marketing. We hire the right talent to manage your digital marketing, monitor their performance, and ensure the highest level of expertise is applied to your brand. Through our experienced team and hands-on management, we keep your campaigns effective and aligned with your goals, allowing you to focus on what matters most to your business.

Ready to elevate your brand? Get detailed insight into how Bintu’s Art and Everything can help you grow.

WORK WITH US

Join Our Team of Creatives

We are looking for passionate marketers, designers, and content creators to join our growing agency. At Bintu’s Art and Everything, we thrive on innovation and creative solutions. Ready to make an impact?

Explore job opportunities and freelance roles available at our agency:

Want to know more about Bintu's Art and Everything, our recruitment process and management structure?